Introduction to Language Models

Language models have revolutionized the way machines understand and generate human language. From early rule-based systems to today’s powerful neural networks, language models have become the backbone of many AI applications—powering virtual assistants, chatbots, translators, and more.

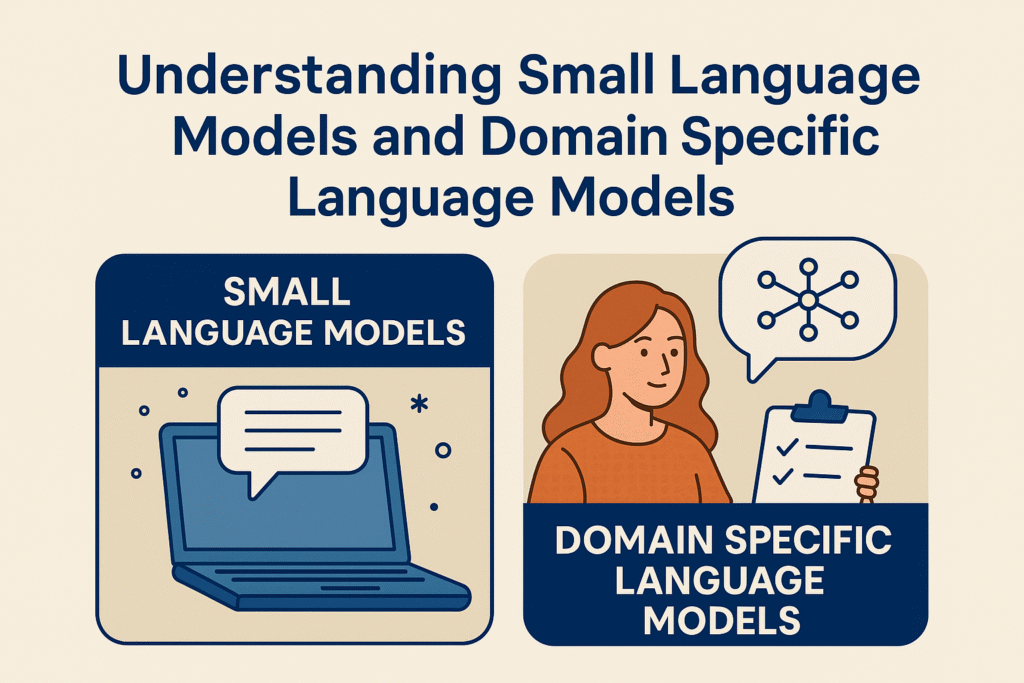

However, the field is evolving. Alongside massive general-purpose models like GPT-4, there is growing interest in small language models (SLMs) and domain-specific language models (DSLMs). These models offer efficiency, adaptability, and precision for specialized tasks and constrained environments.

What Are Small Language Models (SLMs)?

Small language models are compact counterparts to large language models. They are designed to be smaller, faster to run, and less resource-hungry: they have fewer parameters, use less memory, and are easier to deploy on devices with limited computational power.

Key Characteristics of SLMs:

- Fewer parameters (typically millions to a few billion, rather than tens or hundreds of billions)

- Faster inference time

- Lower energy consumption

- Better suited for real-time or on-device applications

SLMs may not have the wide-ranging capabilities of large models, but they shine in focused environments where speed and efficiency matter most.
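The link between parameter count and memory is simple arithmetic: weight memory is roughly the number of parameters times the bytes used to store each one. The sketch below is a rough estimate only. It ignores activations, the KV cache, and runtime overhead, so real memory use will be higher, and the two parameter counts are illustrative sizes rather than specific models.

```python
# Rough weight-only memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, and framework overhead (real usage is higher).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: int, precision: str = "fp32") -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative sizes: a ~125M-parameter small model vs. a 70B-parameter large one
    for name, params in [("small (125M)", 125_000_000), ("large (70B)", 70_000_000_000)]:
        for precision in ("fp32", "fp16", "int8"):
            print(f"{name:>13} {precision}: {weight_memory_gb(params, precision):8.2f} GB")
```

By this estimate a 125M-parameter model needs about 0.5 GB in fp32 (0.125 GB in int8), while a 70B-parameter model needs about 140 GB in fp16, which is why only the former fits comfortably on a phone.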

Use Cases for Small Language Models

Small language models are ideal for applications where resource constraints or latency concerns exist. Common use cases include:

- Mobile and Edge Devices: SLMs power voice assistants, predictive text, and smart IoT systems on phones and wearables.

- Real-Time Applications: Chatbots, gaming NPCs, and auto-reply systems leverage SLMs for instant responses.

- Embedded Systems: Devices with limited RAM and CPU use compact models for local data processing without the cloud.

Because data can be processed on the device itself, their small footprint enables fast, private AI at the edge without constant internet access.

Understanding Domain-Specific Language Models (DSLMs)

Unlike general-purpose models trained on massive, diverse datasets, domain-specific language models focus on a narrow field such as law, medicine, or finance. They are trained or fine-tuned on domain-specific datasets so that they learn the field's terminology and contextual patterns.

How DSLMs Differ:

- Focused Vocabulary: Tailored understanding of industry-specific terms and phrases.

- Increased Accuracy: More reliable output in niche fields where general models often struggle.

- Optimized Performance: Designed for tasks like document classification, summarization, or Q&A within the specific domain.

For example, a DSLM trained on radiology reports can outperform GPT-based models in interpreting medical records or suggesting diagnoses.

Benefits of Domain Specific Language Models

- Precision and Relevance: DSLMs understand jargon and semantics that generic models often miss.

- Cost-Efficient Training: Starting from a pre-trained model with transfer learning requires far less data and compute than training a general model from scratch.

- Enhanced Trust: Professionals are more likely to trust AI that reflects their domain’s language and standards.

Comparing SLMs and DSLMs

| Feature | Small Language Models (SLMs) | Domain-Specific Language Models (DSLMs) |
|---|---|---|
| Model Size | Small | Varies (often medium) |
| Training Data | General or focused | Domain-specific, often layered on general pre-training |
| Performance | Efficient, low latency | Accurate in specialized tasks |
| Use Case | On-device, real-time | Niche industries, high stakes |
| Customization | Often pre-built | Requires domain data |

The two categories are not mutually exclusive: a compact model trained for a specific domain is both an SLM and a DSLM.

Development of SLMs and DSLMs

Creating these models involves:

- Data Preprocessing: Cleaning, normalizing, and tokenizing domain-specific text.

- Model Architecture: Using transformer architectures such as BERT or T5, or compact variants like DistilBERT and TinyBERT.

- Training Tools: Popular frameworks include Hugging Face Transformers, PyTorch, and TensorFlow.

- Fine-Tuning: Adapting pre-trained models to a new domain with fewer resources.
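The preprocessing step can be sketched with the Python standard library alone. This is a minimal illustration, assuming English clinical-style text; the regular expression and the decision to lowercase are illustrative assumptions. Production pipelines normally use the subword tokenizer that ships with the pre-trained model (for example through Hugging Face Transformers) rather than a hand-written word tokenizer, and lowercasing can erase meaningful distinctions in some domains.

```python
import re
import unicodedata

# Word-level tokens; keeps internal ".", "/" and "-" so terms like "mg/dl" or "x-ray" stay whole
TOKEN_PATTERN = re.compile(r"[a-z0-9]+(?:[./-][a-z0-9]+)*")
HTML_TAG = re.compile(r"<[^>]+>")


def preprocess(text: str) -> list[str]:
    """Clean, normalize, and tokenize one domain document (illustrative sketch)."""
    text = HTML_TAG.sub(" ", text)              # cleaning: drop leftover markup
    text = unicodedata.normalize("NFKC", text)  # normalizing: fold full-width chars, ligatures
    text = text.lower()                         # normalizing: case-fold
    return TOKEN_PATTERN.findall(text)          # tokenizing: extract word-level tokens


print(preprocess("Glucose: 110 ｍｇ/dL<br>Chest X-ray: no effusion."))
```

For the sample input this prints `['glucose', '110', 'mg/dl', 'chest', 'x-ray', 'no', 'effusion']`: the HTML tag is removed, the full-width "ｍｇ" is folded to "mg", and the unit and hyphenated term survive as single tokens.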

Challenges in Building Small and Domain-Specific Models

Despite their advantages, SLMs and DSLMs face several hurdles:

- Data Scarcity: Quality domain-specific datasets are often limited.

- Overfitting: Models trained on small or narrow datasets can memorize them and generalize poorly to new inputs.

- Evaluation: Benchmarks for specialized domains are harder to standardize.

Proper validation and ongoing feedback loops are key to maintaining quality.
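One common validation safeguard against overfitting is early stopping: measure the loss on a held-out validation set after each epoch and stop once it has not improved for a set number of epochs, keeping the best checkpoint. The sketch below works on a precomputed list of per-epoch validation losses; the `patience` value and the loss numbers are illustrative assumptions, not results from any real model.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 2) -> tuple[int, int]:
    """Return (stop_epoch, best_epoch): training stops once validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch = 0
    best_loss = float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch   # new best checkpoint
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch              # no improvement for `patience` epochs
    return len(val_losses) - 1, best_epoch        # ran out of epochs without triggering


# Hypothetical validation losses: they improve, then rise as the model starts to overfit
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.75, 0.9], patience=2))  # (4, 2)
```

Here validation loss bottoms out at epoch 2 and training halts at epoch 4, so you would restore the epoch-2 checkpoint.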

Optimization Techniques for SLMs and DSLMs

To boost performance and usability:

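- Quantization: Stores weights (and sometimes activations) at lower numeric precision, such as 8-bit integers instead of 32-bit floats, shrinking the model and often speeding up inference.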

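- Pruning: Removes weights (or whole neurons and attention heads) that contribute little to the output, shrinking the model; actual speedups depend on hardware and runtime support for the resulting sparsity.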

- Transfer Learning: Adapts a general model to a specific task with minimal data.

- Knowledge Distillation: Trains a small student model to mimic the output probabilities of a larger teacher model.
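The quantization and pruning items can be illustrated on a plain list of weights. The sketch below uses symmetric per-tensor int8 quantization and unstructured magnitude pruning, two of the simplest variants; real toolkits (for example the `torch.ao.quantization` and `torch.nn.utils.prune` modules in PyTorch) operate on tensors and also offer per-channel, structured, and calibration-based schemes. The weight values are made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= q * scale, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0   # largest weight maps to +/-127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of weights
    with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [0.5, -1.27, 0.01, 0.9]   # hypothetical weights
q, scale = quantize_int8(weights)
print(q, dequantize(q, scale))
print(magnitude_prune(weights, sparsity=0.5))   # [0.0, -1.27, 0.0, 0.9]
```

Storing `q` as int8 takes 1 byte per weight instead of 4 for float32, at the cost of a rounding error of at most `scale / 2` per weight. The zeroed weights from pruning only save memory or time if the storage format or hardware exploits the sparsity.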

These methods help balance efficiency and accuracy.
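The distillation item above is usually implemented as a loss that pulls the student's output distribution toward the teacher's. A common formulation (popularized by Hinton et al., 2015) softens both distributions with a temperature T, measures their KL divergence, and scales it by T²; in practice this term is typically mixed with the ordinary cross-entropy loss on the true labels. The sketch below computes only the soft-target term for a single example in plain Python, and the logits and T = 2 are illustrative.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                                # subtract max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]                            # hypothetical teacher logits, 3 classes
print(distillation_loss([4.0, 1.0, 0.5], teacher))   # identical outputs -> 0.0
print(distillation_loss([0.5, 1.0, 4.0], teacher))   # disagreeing student -> larger loss
```

The softened teacher distribution still ranks the wrong classes relative to each other, which is the extra signal a student gets beyond hard labels.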

Role of SLMs and DSLMs in Industry Applications

- Healthcare: Clinical NLP tools for diagnostics, medical transcription, and patient triage.

- Finance: Automating compliance checks, fraud detection, and sentiment analysis.

- Legal: Contract analysis, legal research, and discovery tools.

These models streamline expert workflows and reduce manual burdens.

Industry Case Studies of SLM and DSLM Implementation

1. Clinical Decision Support with DSLMs

A startup specializing in healthcare AI deployed a DSLM trained on radiology reports, clinical notes, and medical guidelines. The model assists doctors by flagging potential diagnoses based on symptom descriptions, increasing diagnostic accuracy and reducing workload.

2. Financial Fraud Detection Using SLMs

A fintech company implemented an SLM on its mobile banking platform. Trained on transaction data and known fraud patterns, the model detects anomalies in real time on the device, without cloud processing, enabling fast alerts while keeping data private.

3. Legal Contract Review Automation

A DSLM developed for the legal industry was trained on thousands of contracts and legal case studies. It can summarize agreements, highlight risk clauses, and suggest edits, reducing the review time from hours to minutes.

Ethical Considerations and Data Privacy

As powerful as these models are, they come with ethical responsibilities.

- Bias and Fairness: Narrow training data can introduce or reinforce biases.

- Privacy Concerns: Especially in healthcare or finance, training data must comply with HIPAA, GDPR, or similar regulations.

- Transparency: Users should understand how models arrive at conclusions—especially in critical areas like law or medicine.

Adopting techniques like differential privacy and explainable AI (XAI) helps maintain trust and accountability.
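To make differential privacy less abstract, here is the textbook Laplace mechanism applied to a single counting query, such as the number of records in a dataset that mention a condition. Training a model with differential privacy usually relies on a different variant, DP-SGD, which clips per-example gradients and adds Gaussian noise; this sketch only shows the core idea of noise calibrated to a query's sensitivity, and the query and epsilon value are illustrative assumptions.

```python
import random


def laplace_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.
    A counting query has sensitivity 1 (adding or removing one person changes it
    by at most 1), so the noise scale is b = sensitivity / epsilon."""
    sensitivity = 1.0
    rate = epsilon / sensitivity   # Exponential rate = 1 / b
    # The difference of two i.i.d. Exponential(rate) samples is Laplace(0, 1 / rate)
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise


rng = random.Random(0)
# Hypothetical query: "how many patient records mention condition X?" at epsilon = 0.5
print(laplace_count(42, epsilon=0.5, rng=rng))
```

Smaller `epsilon` means more noise and stronger privacy: at epsilon = 0.5 the noise scale is 2, giving a standard deviation of about 2.8 (√2 × 2).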

Future Trends and Innovations

The landscape of SLMs and DSLMs is rapidly evolving, with exciting trends on the horizon:

- Federated Learning: Enables training across decentralized data sources without moving the data—ideal for privacy-sensitive domains.

- Cross-Domain Adaptation: Models that can switch between related domains with minimal fine-tuning.

- Open-Source Models: Growing libraries of pre-trained DSLMs in law, medicine, and tech on platforms like Hugging Face and GitHub.
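The server-side core of federated averaging (FedAvg), the most common federated learning algorithm, is a weighted average: each client trains locally and sends only its updated weights, which the server averages in proportion to each client's amount of training data. The sketch below shows one aggregation round on toy weight vectors; the hospitals, weights, and dataset sizes are hypothetical, and real systems add local training loops, secure aggregation, and often differential privacy.

```python
def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """FedAvg aggregation step: average client model weights, weighted by each
    client's number of local training examples. Raw data never leaves the
    clients; only these weight vectors are sent to the server."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[j] * size for w, size in zip(client_weights, client_sizes)) / total
        for j in range(n_params)
    ]


# Hypothetical: three hospitals fine-tune the same 3-parameter model locally
hospital_a = [0.2, 0.4, 0.6]   # trained on 100 local records
hospital_b = [0.4, 0.4, 0.0]   # trained on 300 local records
hospital_c = [0.0, 1.0, 0.3]   # trained on 100 local records
print(federated_average([hospital_a, hospital_b, hospital_c], [100, 300, 100]))
```

The result is approximately `[0.28, 0.52, 0.18]`; hospital B's weights count three times as much because it contributed three times as many examples.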

Expect more collaboration between academia and industry to advance these innovations.

How to Choose Between SLMs and DSLMs

When deciding between a small or domain-specific model:

| Criteria | SLM | DSLM |
|---|---|---|
| Device Constraints | ✅ Best fit | ❌ May be too large |
| Domain Focus | ❌ General | ✅ Precise |
| Training Cost | ✅ Lower | ✅ Lower (via fine-tuning) |
| Accuracy | ❌ Lower in niche fields | ✅ High in target domain |

For mobile apps or lightweight general chatbots, SLMs are usually the better fit. For legal or clinical NLP, DSLMs typically offer better accuracy.

Getting Started with SLMs and DSLMs

Beginner Resources:

- Hugging Face Transformers: For pre-trained models and fine-tuning guides

- Google’s TensorFlow Lite (now LiteRT): Designed for deploying models on mobile and edge devices

- fastai and scikit-learn: Accessible libraries for experimenting with NLP tasks

Communities and Courses:

- Coursera and Udacity: ML and NLP specializations

- GitHub repositories and forums like Reddit’s r/MachineLearning

Small language models and domain-specific language models are shaping the future of AI by making it more efficient, accessible, and accurate for specialized needs. Whether you’re building apps for mobile devices or crafting tools for professionals in medicine or law, these models offer the best of both worlds: performance and precision.

Their rise marks a shift from one-size-fits-all solutions to smart, customized AI that fits your unique use case.

FAQs about Small Language Models and Domain-Specific Language Models

Q1. What is the difference between a general language model and a domain-specific one?

A general model is trained on broad, diverse datasets, while a domain-specific model is focused on a particular area like healthcare or law, improving its performance in that field.

Q2. Are small language models less accurate than large ones?

On broad, general-knowledge tasks, usually yes. However, they are faster and cheaper to run, and a small model fine-tuned for a focused task can match or outperform a larger general model on that task, especially in resource-constrained environments.

Q3. Can a model be both small and domain-specific?

Yes! You can train a compact model specifically for a domain, combining efficiency with specialization.

Q4. How do I train a DSLM for my industry?

Start with a pre-trained model, gather domain-specific data, fine-tune the model using frameworks like Hugging Face Transformers or PyTorch, and evaluate it on a held-out set of domain examples.

Q5. What industries benefit most from DSLMs?

Healthcare, finance, legal, scientific research, and manufacturing—where terminology and context vary from general language.

Q6. Are there free tools to build SLMs and DSLMs?

Yes. Hugging Face, TensorFlow, and ONNX offer free resources, pre-trained models, and APIs.